
Wouter Kampmann, Lieven Lemiengre

Automatic Generation of Multi-core Stressmarks

Academic year 2009-2010

Faculty of Engineering

Chair: prof. dr. ir. Jan Van Campenhout

Department of Electronics and Information Systems

Master's thesis submitted to obtain the academic degree of Master of Engineering: Computer Science

Supervisor: Stijn Polfliet

Promoter: prof. dr. ir. Lieven Eeckhout

Automatic generation of multi-core stressmarks

Wouter Kampmann and Lieven Lemiengre

Supervisor(s): Lieven Eeckhout, Stijn Polfliet

Abstract: This article describes a framework for the development of platform-portable stressmarks. Estimating the practical power and thermal characteristics of a processor is vital to evaluate power and thermal management strategies, to examine hotspots that may damage the processor or shorten the chip's lifetime, and to dimension cooling solutions and power circuitry. The proposed framework makes it possible to automatically generate optimized stressmarks for almost any platform.

Keywords: stressmark, platform-independent, synthetic benchmark, portable, power dissipation

I. INTRODUCTION

In the past few years, it has become apparent that the power and thermal characteristics of a processor have become a first-class design constraint. For a long time, the maximum processor power consumption increased by a factor of about two every four years [4], [3]. This trend could not continue, and it came to a halt around 2002, when the industry hit the power wall. Power consumption and thermal dissipation could not increase any further, and controlling the power and thermal characteristics became a first-class design constraint, requiring attention at every stage of the microprocessor design flow.

Conventional benchmarks can be used to estimate the power and thermal characteristics of a typical workload. However, they are unsuitable for estimating the maximum power and operating temperature characteristics [1]. It is important to analyze the practical worst-case behavior of a processor.

The worst-case power consumption and thermal dissipation can be used to develop power and thermal management strategies. Another application is using the worst-case behavior to dimension the thermal package and the power supply circuitry for the processor.

The current practice in the industry is to develop hand-crafted stressmarks. These stressmarks are developed by specialists who have very detailed knowledge of the microprocessor architecture. It is a tedious and time-consuming job. The resulting stressmark is processor-specific, so this work has to be repeated if the micro-architecture is modified.

We developed a framework to automate the creation of stressmarks. We based our work on the StressMarker framework, which could automatically generate stressmarks for the Alpha 21264 microprocessor architecture [1]. The key idea is to generate synthetic benchmarks based on an abstract workload description; a machine learning algorithm then optimizes this workload description to induce certain thermal or power characteristics.

Our contributions:

• Our aim is to make the framework platform-portable, and we achieve this by generating the synthetic benchmarks purely in C language constructs. The resulting C program is compiled for the target platform. This means that our framework is unaware of the underlying platform and the platform-specific details are filled in by the compiler. This allows the framework to generate stressmarks for a very wide range of systems. We verified the results for MIPS and x86-64 targets.

• A stressmark is described by a number of abstract parameters, each determining an aspect of either the target platform or the workload of the stressmark. We minimized the number of platform-specific parameters and specialized this workload model for generating stressmarks. We also extended the workload model to support generating multi-threaded stressmarks. The result is a lean workload model, specialized for stressmarks, that uses fewer parameters than the StressMarker framework [1] (30 instead of 40) while offering more functionality.

II. FRAMEWORK WORKFLOW

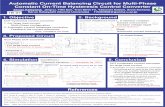

The framework workflow consists of four steps. We start with a workload description, which is then transformed into a C stressmark. The C stressmark is first compiled and then executed on a test platform. The measurements are fed into the machine learning algorithm, which generates an optimized workload, and the cycle is complete. As a machine learning algorithm we use a genetic search algorithm.

Fig. 1. Framework workflow (stages: Abstract Workload Model, Synthetic Benchmark, Measurements (SESC / HPC), Optimization).
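The cycle described above can be sketched in C as follows. This is a simplified illustration, not the framework's implementation: it uses selection and mutation only (no crossover), `N_PARAMS`, `POP_SIZE`, `next_random`, and `optimize` are hypothetical names, and `measure` is a stand-in for the real "generate, compile, run, measure" steps.

```c
#include <stdint.h>
#include <stdlib.h>

#define N_PARAMS 4   /* illustrative only; the workload model has 30 parameters */
#define POP_SIZE 8   /* illustrative population size */

typedef struct {
    double params[N_PARAMS];  /* abstract workload description */
    double fitness;           /* stands in for measured power or IPC */
} Workload;

/* Small deterministic pseudo-random generator returning a value in [0, 1). */
static double next_random(uint32_t *state) {
    *state = *state * 1664525u + 1013904223u;
    return (double)(*state >> 8) / 16777216.0;
}

/* Hypothetical stand-in for generating the C stressmark, compiling it,
 * running it and measuring it. The score peaks when every parameter is 0.5. */
static double measure(const Workload *w) {
    double score = 0.0;
    for (int i = 0; i < N_PARAMS; i++) {
        double d = w->params[i] - 0.5;
        score -= d * d;
    }
    return score;
}

/* qsort comparator: highest fitness first. */
static int by_fitness_desc(const void *a, const void *b) {
    double fa = ((const Workload *)a)->fitness;
    double fb = ((const Workload *)b)->fitness;
    return (fa < fb) - (fa > fb);
}

/* Runs the cycle for a number of generations and returns the best fitness. */
double optimize(int generations, uint32_t seed) {
    Workload pop[POP_SIZE];
    uint32_t rng = seed;

    for (int i = 0; i < POP_SIZE; i++)
        for (int j = 0; j < N_PARAMS; j++)
            pop[i].params[j] = next_random(&rng);

    for (int g = 0; ; g++) {
        for (int i = 0; i < POP_SIZE; i++)
            pop[i].fitness = measure(&pop[i]);
        qsort(pop, POP_SIZE, sizeof pop[0], by_fitness_desc);
        if (g == generations)
            break;
        /* Keep the better half; replace the rest by mutated copies of it. */
        for (int i = POP_SIZE / 2; i < POP_SIZE; i++) {
            pop[i] = pop[i - POP_SIZE / 2];
            int j = (int)(next_random(&rng) * N_PARAMS);
            pop[i].params[j] = next_random(&rng);
        }
    }
    return pop[0].fitness;
}
```

Because the better half of each generation survives unchanged, the best fitness in this sketch never decreases from one generation to the next.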

III. DEVELOPING THE WORKLOAD MODEL

Stressmarks are described by two kinds of parameters: platform parameters and workload parameters. The platform parameters are the number of hardware threads and the size of a cache line. These parameters can easily be defined for any platform. The second type of parameters describes the workload of the stressmark. It is these parameters that the machine learning algorithm will optimize. They were chosen based on the research by Joshi et al. [1]. These parameters describe a set of hardware-independent workload characteristics. We tried to minimize the number of parameters because fewer parameters result in a smaller search space, allowing the machine learning algorithm to work more efficiently.

A. Workload Parameters

The workload model consists of four major parts: the instruction mix, the minimum dependency distance between instructions, the data and instruction footprint, and the memory striding behavior.

A.1 Instruction Mix

This part consists of a high-level distribution of the proportions of arithmetic, memory, and branch instructions. For each instruction type a more specialized distribution is then defined. For arithmetic instructions, it is the distribution of each arithmetic operation, defined by its data type and numeric operation. Memory instructions are characterized as loads or stores, working in shared or thread-local memory. For branch instructions, their branch behavior is defined.

A.2 Minimum Dependency Distance

The dependency distance is the number of instructions between two dependent instructions. Since we only work with out-of-order processors, we only considered RAW (Read after Write) dependencies. Instruction dependencies limit the instruction-level parallelism, so this parameter is essentially a measure of the ILP.

A.3 Data and Instruction Footprint

The footprints are the number of unique data and instruction addresses referenced while running the stressmark. Their size affects the stress on the memory subsystem, particularly the caches.

A.4 Memory Striding Behavior

Memory instructions in the stressmark may exhibit some dynamic behavior. Some memory instructions read from or write to the same address every time they are executed. Other memory instructions walk through the memory, using a different address every time they are executed. We use data stream strides to model this behavior.

IV. GENERATING C BENCHMARKS

We want the framework to be platform-portable, meaning that, given the platform-dependent parameters, it should be capable of generating stressmarks for almost any platform without knowing the instruction set or register set. To achieve this, we use the low-level programming language C instead of assembler to express the stressmark. Once the stressmark is compiled for the target platform, we obtain an executable stressmark.

One of the inherent difficulties of this approach is the optimizing behavior of the compiler. Compilers are made to optimize away redundant code, loop invariants, etc., to increase the program's performance. However, when a stressmark is compiled, some compiler optimizations could change characteristics that reflect the workload model; these optimizations are undesired. Unfortunately, we cannot wholly disable optimization, as we still rely on intelligent instruction selection and register allocation for efficiency and correctness.

We addressed the compiler optimization problem using two approaches. First, we dumbed the compiler down to the minimum level of optimization, using a predefined optimization level tweaked with special compiler flags. We then designed the structure of a stressmark to be immune to the remaining optimizations of the dumbed-down compiler.

The resulting method is capable of generating effective stressmarks using C. The only significant drawback of our technique is the heavy register usage needed to maintain the minimum dependency distance. If the platform does not have enough hardware registers, there is a risk of register spilling.

V. RESULTS

A. Test Platforms

We used two platforms to verify the effectiveness of our framework. First, we set up a simulated SMP MIPS platform, on which we optimized for maximal power usage. The other platform is a real-world system: an Intel Core2 Quad processor that we optimized for maximum IPC; unfortunately, the optimization gets stuck at a local maximum (IPC ≈ 3) because it is not using any memory instructions.

Fig. 2. Results. Top: MIPS, power (W) per generation. Bottom: x86-64, IPC per generation.

VI. CONCLUSION

We showed that we can generate effective multi-threaded stressmarks using C as the implementation language. Because of this, our framework for automated stressmark generation is platform-portable.

REFERENCES

[1] Ajay M. Joshi, Lieven Eeckhout, Lizy Kurian John, and Ciji Isen. Automated microprocessor stressmark generation. In HPCA [2], pages 229–239.

[2] 14th International Conference on High-Performance Computer Architecture (HPCA-14 2008), 16-20 February 2008, Salt Lake City, UT, USA. IEEE Computer Society, 2008.

[3] S. H. Gunther, F. Binns, D. M. Carmean, and J. C. Hall. Managing the impact of increasing microprocessor power consumption. Intel Technology Journal, (1):2005, 2001.

[4] Herb Sutter. The free lunch is over: A fundamental turn toward concurrency in software. Dr. Dobb's Journal, 30(3):202–210, 2005.


Preface

During the past nine months, we have been very much looking forward to the completion of the document you are now holding. We hope you enjoy reading it as much as we enjoyed preparing and writing it.

In the first chapter, we introduce the concept of stressmarks and briefly discuss some important trends and events motivating the subject of our master thesis.

The second chapter contains an overview of the workload model we defined, with a description of the different workload parameters it contains. We discuss the consequences of the design choices we made and how they relate to the characteristics of a generated stressmark.

In the third chapter, the main component of our framework, the stressmark generator, is explained. We take a closer look at how the workload model is transformed into an executable synthetic benchmark.

Chapter four explains how we employed a genetic algorithm to turn synthetic benchmarks into stressmarks, optimizing for an output characteristic such as power usage or the number of instructions per cycle.

The fifth chapter combines the components described in the foregoing chapters, giving a high-level overview of the entirety of our StressmarkRunner framework. The two different platforms we set up are discussed in preparation for the next chapter.

In the sixth chapter, we discuss in detail the results we obtained from running the framework on our two target platforms, and how we verified the correctness and performance of our genetic algorithm and stressmark generator component.

Chapter seven concludes this document by providing a final overview and some closing remarks on the work we did.

Acknowledgements

Producing a master thesis can be quite a daunting task. We would therefore like to thank all those who have supported and guided us throughout this endeavor, especially our supervisors, professor Lieven Eeckhout and Stijn Polfliet.

Wouter Kampmann and Lieven Lemiengre, May 2010


Usage restrictions

"The authors give permission to make this master dissertation available for consultation and to copy parts of this master dissertation for personal use.

In the case of any other use, the limitations of the copyright have to be respected, in particular with regard to the obligation to state expressly the source when quoting results from this master dissertation."

Wouter Kampmann and Lieven Lemiengre, May 2010


Contents

Preface

Usage restrictions

Acronyms

1 Introduction
1.1 Before the Power Wall
1.2 Hitting the Power Wall
1.3 Consequences
1.4 Stressmarks

2 The Workload Model
2.1 Stressmarks
2.2 Workload Parameters
2.3 Workload Summary
2.4 Discussion

3 Synthetic Benchmarks in C
3.1 Introduction
3.2 Language and Compiler Requirements
3.3 Exploring the Optimization Behavior of the Compiler
3.4 Interesting C Constructs
3.5 Forming the Stressmark
3.6 Remarks
3.7 Stressmark Generation

4 Stressmark Optimization
4.1 Introduction
4.2 Genetic Search Algorithm
4.3 Meta Algorithm

5 The Stressmark Runner Framework
5.1 Design Considerations
5.2 Platform Setups
5.3 Framework Architecture

6 Results
6.1 Number of SESC Instructions
6.2 Exploration of Search Space
6.3 GA Results
6.4 GA Efficiency
6.5 Theoretical Maximum

7 Conclusion

Bibliography

List of Figures

List of Tables


Acronyms

ACID Atomicity, Consistency, Isolation, Durability

ALU Arithmetic Logic Unit

API Application Programming Interface

BTB Branch Target Buffer

IDE Integrated Development Environment

ILP Instruction Level Parallelism

MDD Minimum Dependency Distance

RAW Read after Write

SIMD Single Instruction Multiple Data

WAR Write after Read

WAW Write after Write

XML Extensible Markup Language

YAML YAML Ain’t Markup Language


Chapter 1

Introduction

For a long time, every four years the maximum power consumption of processors increased by a factor of a little more than two. This evolution lasted about fifteen years, from 1986 until 2002. Around 2002 things changed; processor manufacturers hit the power wall, and ever since, the power consumption of processors has only marginally increased.

1.1 Before the Power Wall

Before we look at the consequences of the power wall, let us look at the period pre-dating it. For about fifteen years, processor manufacturers were able to increase the single-threaded performance of their processors by about 50% every year [11]. How did they achieve this?

1. Moore's law: The number of transistors that can be placed inexpensively in an integrated circuit doubles about every two years [13].

2. RISC processors: Using simple instructions that are easy to pipeline.

3. Out-of-order execution: Mining ILP in single-threaded workloads by employing speculative execution.

4. Increasing the clock frequency: Requiring deeper pipelines.

1.2 Hitting the Power Wall

This evolution came to an end around 2002. You could say that for a long time, power was free and transistors were expensive, but now the situation had turned around [8]. What happened?


Figure 1.1: SPECint performance over the years (image source: [11]).

1. A higher clock frequency means more power consumption and heat dissipation, and cooling solutions can only take you so far. Their cost increases exponentially with the thermal dissipation [9].

2. ILP wall: Serial performance can be improved by mining more ILP, but this requires more speculative execution. The law of diminishing returns applies; at some point the hardware cost to mine more ILP starts increasing exponentially. This is not beneficial for the performance per watt.

3. Speed of light: It takes many clock pulses to transport data from one side of the chip to the other.

1.3 Consequences

For the past few years, the power consumption and the clock frequencies have basically stayed the same.

However, Moore's law still applies, so the transistor budgets are still increasing at the same speed. To improve performance, processor manufacturers are now focusing on multi-core processors. If we look at the immediate future, we also see that more and more functionality is being integrated into the CPU. For example, Intel and AMD are going to launch a CPU with a GPU integrated on the die next year, memory controllers are already integrated on most CPUs, and PCI-express controllers are also being integrated on some Intel processors.


Figure 1.2: Power wall, frequency wall and ILP wall (image source: [14]).

It is clear that the power and thermal characteristics of a processor are becoming increasingly important. They have become a first-class design constraint for high-performance processors and should be considered at every stage of the microprocessor design flow.

1.4 Stressmarks

Conventional benchmarks can be used to estimate the power and thermal characteristics of a typical workload. However, they are unsuitable for estimating the maximum power and thermal characteristics [12]. There is in fact an increasing disparity between the maximum power consumption and the power consumed while running more typical applications [9]. This growing difference in power consumption presents the system designer with a difficult problem. The system should be designed to ensure that the processor does not exceed the specified maximum operating temperature, even though the circumstances that push it there are extremely rare.


The worst-case behavior of a processor can be used for a number of applications:

1. Developing power and thermal management strategies for the processor. The processor could reduce its frequency if it is in danger of overheating; this can reduce the cost of the cooling solution. More recently, the opposite strategy has become possible as well: a multi-core processor using only one core could over-clock that core to improve single-threaded performance while the other cores are idle [2].

2. Finding hotspots: Hotspots are small regions on a chip that dissipate a large amount of power for a short time. This localized overheating can reduce the lifetime of a chip, cause timing problems, degrade circuit performance, and even cause chip failure.

3. The worst-case behavior can also be used to dimension the cooling solution and the power supply circuitry for the processor.

Current practice in the industry is to develop hand-crafted stressmarks. These stressmarks are developed by specialists who have very detailed knowledge of the microprocessor architecture. It is a tedious and time-consuming job. Moreover, the resulting stressmark is processor-specific, so this work will have to be repeated if the processor architecture is changed.

We believe the process of generating stressmarks can be automated. Our work is based on the StressMarker framework [12], which could automatically generate stressmarks for the Alpha 21264. The key idea is to generate synthetic benchmarks based on an abstract workload description. A machine learning algorithm then optimizes this workload to induce certain thermal or power characteristics.

We have created a similar framework with a few new features:

• Our framework is platform-portable. We achieve this by generating synthetic benchmarks purely in C language constructs.

• We developed a workload model that is specialized for stressmark generation.

• We can generate multi-threaded stressmarks that communicate through memory to stress cache coherence protocols and inter-cache communication.


Chapter 2

The Workload Model

2.1 Stressmarks

Stressmarks are a kind of synthetic benchmark especially constructed to stress a specific part of the processor under test. In our case, these benchmarks are optimized using a machine learning algorithm to induce certain power or thermal characteristics.

A stressmark is described by a number of abstract parameters, each determining an aspect of either the target platform or the workload of the stressmark. The synthetic benchmark generator processes these parameters and generates the stressmark's code, written in C language constructs.

The first type of parameters describes the target platform, determining key properties of the processor the stressmark is being developed for. We opted to keep the number of parameters to a minimum in our framework, so we pick only the cache line size and the number of hardware threads as platform parameters. Note that these two generally apply to any processor.

The second type of parameters describes the workload of the stressmark. These are the parameters that the machine learning algorithm optimizes in order to create an efficient stressmark. We have chosen them based on prior research by Joshi et al. [12], introduced some simplifications, and extended the parameters to support multi-threaded stressmarks. In the next section, we discuss these workload parameters in detail.

2.2 Workload Parameters

The workload model consists of four major parts: the instruction mix, the minimum dependency distance between instructions, the data and instruction footprint, and the memory striding behavior.


2.2.1 Instruction Mix

We start by defining a distribution describing the proportion of arithmetic, memory, and branch instructions. For each of these general instruction types, we then define another distribution, determining the relative frequencies of instructions of more specific subtypes (e.g. integer addition, double multiplication, etc. for the arithmetic instructions).

Arithmetic Instructions

Arithmetic instructions take two operand registers, perform some operation on them, and store the result in a register. These instructions are characterized by an operation and the data type of the operands. Our framework supports integer and single and double precision floating point as data types. The supported operations are addition, multiplication, and division.

The relative frequencies of all arithmetic instructions that should be used in the stressmark are combined in an arithmetic instruction profile. These instructions should stress the ALUs responsible for arithmetic calculations in the processor.

Table 2.1: Example of an arithmetic instruction profile

| Datatype | Operation | Relative frequency |
| --- | --- | --- |
| Integer | Add | 20% |
| Integer | Mul | 20% |
| Integer | Div | 20% |
| Double | Add | 20% |
| Double | Mul | 10% |
| Double | Div | 10% |
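As an illustration of how such a profile could drive instruction selection, the following sketch maps a number in [0, 1) to a profile entry through the cumulative distribution. The names `ArithEntry`, `PROFILE`, and `pick_entry` are hypothetical, and this is only one plausible way to consume the profile, not necessarily how the generator does it; the frequencies are those of Table 2.1.

```c
#include <stddef.h>

typedef struct {
    const char *datatype;
    const char *operation;
    double frequency;   /* relative frequency; the profile sums to 1.0 */
} ArithEntry;

/* Values copied from Table 2.1. */
static const ArithEntry PROFILE[] = {
    {"integer", "add", 0.20},
    {"integer", "mul", 0.20},
    {"integer", "div", 0.20},
    {"double",  "add", 0.20},
    {"double",  "mul", 0.10},
    {"double",  "div", 0.10},
};

/* Maps u in [0, 1) to the index of a profile entry by walking the
 * cumulative distribution of the relative frequencies. */
size_t pick_entry(const ArithEntry *profile, size_t n, double u) {
    double cumulative = 0.0;
    for (size_t i = 0; i < n; i++) {
        cumulative += profile[i].frequency;
        if (u < cumulative)
            return i;
    }
    return n - 1;  /* guards against floating-point rounding near 1.0 */
}
```

Feeding `pick_entry` with uniformly distributed values of `u` yields each instruction type with roughly the frequency listed in the profile.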

Memory Instructions

A memory instruction takes an address and either reads from that address and writes its value to a register, or writes the register value to the address. The address used by the memory instruction can point to a shared portion of the memory that is used by all threads in the system, or to a local portion of memory specifically allocated for the thread. Consequently, we can define four kinds of memory operations in total: shared loads, shared stores, local loads, and local stores.


Stores and loads stress the ALUs responsible for memory operations, the store/load buffers, and the memory system, including the caches. If the memory operation uses shared memory, the instruction may cause extra inter-cache traffic. The amount of traffic depends on the cache coherence protocol. If there is a lot of contention between processors, this will cause stalls, negatively affecting the processor's throughput.

The relative frequencies of all memory instructions are combined in the memory instruction profile. This profile does not determine the memory access pattern.

Table 2.2: Example of a memory instruction profile

| Operation | Shared? | Relative frequency |
| --- | --- | --- |
| Load | Yes | 10% |
| Store | Yes | 10% |
| Load | No | 40% |
| Store | No | 40% |

Branch Instructions

There are two kinds of branch instructions: conditional and unconditional branches. Using branch instructions, we want to stress the ALU responsible for branch processing, the branch predictor, and other associated hardware structures (e.g. the BTB).

To stress the branching-related logic, we need to control the predictability of a branch. We can do this using the branch transition rate [10]. The branch transition rate is the number of times a branch switches between taken and untaken, divided by the number of times the branch is executed.

For example, a branch transition rate of 100% means that the branch will constantly alternate between taken and untaken. Branch transition rates that are very high (90-100%) or very low (0-10%) have a high predictability. If the branch transition rate is between 30 and 70 percent, the branching behavior is harder to predict.

In the workload model we use the cumulative distribution of the inverse branch transition rate.


Table 2.3: Example of a branch transition rate distribution

| Inverse branch transition rate | Relative frequency |
| --- | --- |
| 1 | 70% |
| 2 | 20% |
| 4 | 5% |
| 8 | 5% |
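The definition of the branch transition rate given above can be made concrete with a short sketch. It assumes, purely for illustration, that a branch with inverse transition rate T flips its outcome once every T executions, driven by an execution counter; `branch_outcome` and `measured_transition_rate` are hypothetical names, and this is not necessarily the construction the stressmark generator uses.

```c
/* Outcome (1 = taken, 0 = untaken) of a branch that switches direction
 * once every `inverse_rate` executions. `inverse_rate` must be >= 1. */
int branch_outcome(unsigned long execution, unsigned long inverse_rate) {
    return (int)((execution / inverse_rate) & 1ul);
}

/* Branch transition rate as defined in the text: the number of switches
 * between taken and untaken, divided by the number of executions. */
double measured_transition_rate(unsigned long inverse_rate,
                                unsigned long executions) {
    unsigned long transitions = 0;
    if (executions == 0)
        return 0.0;
    for (unsigned long i = 1; i < executions; i++) {
        if (branch_outcome(i, inverse_rate) !=
            branch_outcome(i - 1, inverse_rate))
            transitions++;
    }
    return (double)transitions / (double)executions;
}
```

For long runs the measured rate approaches 1/T, so an inverse rate of 1 gives a constantly alternating branch and larger values give longer runs in the same direction.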

2.2.2 Dependencies Between Instructions

If an instruction has to wait for the result of a previous instruction, there is a dependency. The dependency distance is the number of instructions between two dependent instructions. There are three kinds of instruction dependencies: WAW (Write after Write), RAW (Read after Write), and WAR (Write after Read). Since out-of-order processors can eliminate WAW and WAR dependencies, we will only consider RAW dependencies.

Instruction dependencies limit the instruction level parallelism (ILP). In a stressmark we want the ILP to be large enough to fully occupy all ALUs in the processor. We will therefore define a minimum RAW distance in the workload model.
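The following sketch shows one way to check the minimum RAW distance of an instruction trace, and why rotating over N registers yields a distance of N. It is an illustration under stated assumptions: the distance between an instruction i and a later instruction j that reads its result is counted as j - i, and `Instr`, `MAX_REGS`, `MAX_TRACE`, `min_raw_distance`, and `round_robin_distance` are hypothetical names.

```c
#include <stddef.h>

#define MAX_REGS  64    /* illustrative register-file size */
#define MAX_TRACE 256   /* illustrative trace length limit */

/* One instruction: destination and two source registers (-1 = unused). */
typedef struct {
    int dest, src1, src2;
} Instr;

/* Returns the smallest RAW distance in the trace, or -1 if there is none. */
long min_raw_distance(const Instr *trace, size_t n) {
    long last_write[MAX_REGS];
    long best = -1;

    for (int r = 0; r < MAX_REGS; r++)
        last_write[r] = -1;

    for (size_t i = 0; i < n; i++) {
        const int srcs[2] = { trace[i].src1, trace[i].src2 };
        for (int s = 0; s < 2; s++) {
            int r = srcs[s];
            /* A source written earlier in the trace is a RAW dependency. */
            if (r >= 0 && r < MAX_REGS && last_write[r] >= 0) {
                long distance = (long)i - last_write[r];
                if (best < 0 || distance < best)
                    best = distance;
            }
        }
        if (trace[i].dest >= 0 && trace[i].dest < MAX_REGS)
            last_write[trace[i].dest] = (long)i;
    }
    return best;
}

/* Builds a trace that rotates over `mdd` registers (r = r op r) and
 * returns its measured minimum RAW distance, or -1 on invalid input. */
long round_robin_distance(int mdd, size_t n) {
    Instr trace[MAX_TRACE];
    if (mdd < 1 || mdd > MAX_REGS || n > MAX_TRACE)
        return -1;
    for (size_t i = 0; i < n; i++) {
        int r = (int)(i % (size_t)mdd);
        trace[i].dest = r;
        trace[i].src1 = r;
        trace[i].src2 = r;
    }
    return min_raw_distance(trace, n);
}
```

The round-robin pattern also makes the cost visible: a larger minimum distance needs proportionally more live registers.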

2.2.3 Data and Instruction Footprint

The footprints are the number of unique data and instruction addresses referenced while running the stressmark. Their size affects the stress on the memory subsystem, particularly the caches.

The size of the instruction footprint determines whether the stressmark fits into the L1 instruction cache. The data footprint is twofold, defining the size of the global memory region on the one hand, and of the private memory region allocated for every thread on the other. The size of the global memory determines the contention probability when multiple threads read from it or write to it.

In the workload model, we define the three parameters discussed above: the number of instructions, the size of the global memory, and the size of the thread-local memory.

Table 2.4: Example of a data and instruction footprint

| Parameter | Value |
| --- | --- |
| Instruction footprint | 400 instructions |
| Shared data footprint | 32 kB |
| Thread-local data footprint | 512 kB |


2.2.4 Memory Striding Behavior

Memory instructions in the stressmark may exhibit some dynamic behavior. Some memory instructions read from or write to the same address every time they are executed. Other memory instructions walk through the memory, using a different address every time they are executed. We will use data stream strides to model this behavior.

Memory instructions that walk through the memory do this with a constant step size, the stream stride. The size of the stream stride is a multiple of the size of a cache line (defined in the platform-dependent parameters). Every memory instruction is assigned a stream stride.

In the workload model, we define a distribution of stream strides. Memory instructions that constantly access the same address have a stream stride of size zero. Instructions with a stream stride greater than zero will cycle through a part of the memory defined in the data footprint.

Table 2.5: Example of a stream stride distribution

| Stride value | Relative frequency |
| --- | --- |
| 0 | 80% |
| 1 | 10% |
| 2 | 2% |
| 4 | 6% |
| 8 | 2% |
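A minimal sketch of the address computation implied by a stream stride is given below. It assumes the stride value counts cache lines and that the walk wraps around at the end of the footprint; `next_offset` and its parameter names are hypothetical.

```c
#include <stddef.h>

/* Returns the byte offset a memory instruction uses on its next execution.
 * `stride_lines` is the stream stride in cache lines; a stride of zero
 * keeps the instruction on the same address. The walk wraps around inside
 * a region of `footprint_bytes` bytes. */
size_t next_offset(size_t offset, size_t stride_lines,
                   size_t cache_line_bytes, size_t footprint_bytes) {
    if (footprint_bytes == 0)
        return 0;
    return (offset + stride_lines * cache_line_bytes) % footprint_bytes;
}
```

With a 64-byte cache line and the 32 kB shared footprint of Table 2.4, a stride of 2 advances the offset by 128 bytes per execution and returns to offset 0 after 256 executions.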

2.3 Workload Summary

In total, there are 30 variables in the workload model. While developing the workload model, we tried to minimize the number of parameters, keeping only those relevant to developing stressmarks. A workload model containing fewer parameters results in a smaller search space, allowing the machine learning algorithm to work more effectively.

Each parameter is designed to stress a specific part of the processor. Although the parameters may interact with each other, each has one specific goal, which keeps overlap between them to a minimum. For example, we could support more arithmetic operations (shifts or subtractions), but we decided against this because we assume that we can optimally stress all ALUs with the provided operations, rendering any more operations superfluous. Should this assumption turn out to be false, our framework is set up in such a way that operations can be added with relative ease.

While developing the workload model, we also had to take into consideration that it will eventually be converted into a stressmark. To be able to generate the stressmark code efficiently, we defined some extra restrictions on some parameters. For example, the inverse branch transition rate is restricted to powers of two. We will explain this in more detail in the next chapter.

Table 2.6: Workload summary

| Category | # parameters |
| --- | --- |
| Instruction mix | 3 |
| Arithmetic instruction distribution | 9 |
| Memory instruction distribution | 5 |
| Branch transition rate | 4 |
| Inter-instruction dependency | 1 |
| Footprint | 3 |
| Stream stride distribution | 5 |
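The summary can be pictured as a single C structure whose field sizes follow Table 2.6. This is a hypothetical layout for illustration only: the field names and types are assumptions, and `search_space_size` assumes that every parameter takes the same number of discrete levels, which the workload model does not state.

```c
/* Parameter counts per category, taken from Table 2.6. */
enum {
    N_MIX = 3, N_ARITH = 9, N_MEM = 5, N_BRANCH = 4,
    N_MDD = 1, N_FOOTPRINT = 3, N_STRIDE = 5,
    N_TOTAL = N_MIX + N_ARITH + N_MEM + N_BRANCH +
              N_MDD + N_FOOTPRINT + N_STRIDE
};

/* Hypothetical container for one workload description. */
typedef struct {
    double        instruction_mix[N_MIX];
    double        arithmetic_profile[N_ARITH];
    double        memory_profile[N_MEM];
    double        branch_transition[N_BRANCH];
    unsigned      min_dependency_distance;
    unsigned long footprint[N_FOOTPRINT];
    double        stride_distribution[N_STRIDE];
} WorkloadModel;

/* Size of the search space if each of `n_params` parameters can take
 * `levels` distinct values (an illustrative simplification). */
double search_space_size(unsigned levels, unsigned n_params) {
    double size = 1.0;
    for (unsigned i = 0; i < n_params; i++)
        size *= (double)levels;
    return size;
}
```

Under that simplification, even with only two levels per parameter, going from 40 parameters to 30 shrinks the search space by a factor of 1024.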

2.4 Discussion

2.4.1 Differences With Prior Work

In previous work by Joshi et al. [12], stressmarks were created based on a workload model made to create synthetic equivalents of real benchmarks. This workload model was composed of 40 parameters and had no support for multi-threaded workloads, nor was it platform-portable. Our own workload model is based on this, but we would like to highlight a few key differences:

• In the original stressmark paper, the dependency distance was a cumulative distribution. To create stressmarks it suffices to define a minimum dependency distance.

• We do not define a basic block size in our model.

• Because our benchmark is platform-portable, we cannot make assumptions about the latency of arithmetic operations. We define three operations (addition, multiplication, and division) for three datatypes (integers, floats, and doubles).

• We reduced the number of parameters that describe the stream stride distribution and the branch transition rate distribution, because we found that this relatively coarse granularity suffices for the generation of stressmarks.

• We added support for multi-threaded stressmarks by introducing operations on shared memory, which affect stressmark performance through cache coherence traffic.

2.4.2 Platform Portability

In a perfect world, we would be able to produce a completely platform-independent stressmark

that could be used to stress any given processor to its absolute limit. To approach this limit

in practice however, a stressmark needs to optimally stress as many processor components as

possible, and often exploit the specifics of the platform it is running on. It is clear that this

unfortunately renders stressmarks platform-dependent by their very nature.

Because full-fledged platform independence is not possible, our goal becomes maximal plat-

form portability; we design our framework making sure that it can generate stressmarks for

a wide range of platforms, and that the adoption of new platforms is relatively easy.

Our workload model is an excellent starting point in the achievement of this ambition, since

its abstract parameters can be applied to most platforms and new platforms can often be

easily supported by adding a few new parameters.

The next step is to generate the executable stressmark without breaking platform portabil-

ity. To achieve this, a widely supported low-level programming language, in our case the C

language, is used instead of assembler to express the stressmark (as in previous work). Using

a compiler for the target platform, we then obtain the executable stressmark. The framework

itself therefore does not know the instruction set or register set; it only needs a compiler sup-

porting the platform. The wide availability of compilers for different platforms thus ensures

platform portability.

We learnt however that our approach is not without limitations, as the following example

shows. To fully stress a modern processor we would have to support SIMD instructions in

the workload model. Supporting SIMD instructions is however problematic because there

is no standardized way to express them in C. We will explore a couple of possible solutions

further in this document, but unfortunately it is not possible to implement them in a way

that platform portability is absolutely guaranteed.

2.4.3 Branch Predictability

The performance characteristics of the branch predictor component, such as power usage and

heat production, are determined by the number of branch instructions on the one hand and

the misprediction rate on the other; it is therefore of crucial importance that our framework

can influence these two properties through the workload model.

For the number of branch instructions, this is trivial since this property directly corresponds to

the workload parameter determining the instruction footprint. For the branch predictability

however, there is no such workload parameter since it is not possible to accurately generate

code with a specific branch misprediction rate. We will however show that the branch

misprediction rate can be controlled indirectly by setting the branch transition rate. The latter

is indeed a workload parameter as it is perfectly possible to generate synthetic code with a

given branch transition rate.

In the paper "Branch Transition Rate: A New Metric for Improved Branch Classification

Analysis", Haungs, Sallee, and Farrens [10] found that the branch misprediction rate for

global as well as local branch predictors is determined by the transition rate and the taken rate

of the branch. The taken rate is the fraction of executions in which the branch is taken; the

transition rate is the fraction of executions in which the branch changes direction with respect

to its previous execution. Due to design decisions about the workings

of our stressmark generator, the taken rate of our branches is a fixed 50%, and the transition

rate is 100%, 50%, 25%, or 12.5%.

Using the following graphs from Haungs et al., it can be deduced that the corresponding

misprediction rates range from the lowest values (<5%, white) to the highest (>45%, black) with

two evenly spread intermediates.

Figure 2.1: Miss rates of local (left) and global (right) branch predictors for different classes

of branches, identified by transition rate and taken rate.

On the axes of these graphs, class 0 corresponds to 0-5%, class 1 to 5-10%, ..., class 4 to

20-25%, class 5 to 25%-75%, class 6 to 75%-80%, ..., class 9 to 90%-95%, and class 10 to

95%-100%. The values for our stressmarks with a fixed taken rate of 50% are

therefore located in the middle column, which contains widely varying values.

2.4.4 Transformation Aspects

We now draw attention to the various aspects of the transformation of the workload model into

the executable stressmark, which is performed by the stressmark generator. First, we need

to distinguish clearly between the workload model itself, which is the input of the stressmark

generator, and the effective workload. The latter contains the actual workload parameter

values of the generated stressmark when it is executed.

Randomized vs. Deterministic Stressmark Generation

Although the workload model defines the key characteristics of the stressmark that needs to be

generated, there are some aspects that are not explicitly determined by it; notable examples

are the taken branch rates discussed in the previous section, and the order of instructions.

During stressmark generation, these undefined aspects can in general either be determined at

random, or by reasonable design choices. If determined by design choice, the transformation

process will always produce the same stressmark for a given workload, but the number of

stressmarks that can possibly be created is reduced, and it cannot necessarily be guaranteed

that the design choice always produces the best stressmark possible. If chosen randomly,

the transformation process is no longer deterministic and a single workload model can then

generate different stressmarks during sequential runs of the stressmark generator.

Although we initially opted to determine some of the undefined aspects randomly, it became

clear later on that this was the wrong choice. The effective workloads produced by different

stressmarks based on the same workload model varied too much, causing the search algorithm

described further in this document to function inefficiently, since a given workload model no

longer corresponded to a single fitness value. Concluding that a deterministic transformation

is really necessary, we switched later on and tweaked our design choices for best performance.

We also looked at the theoretical maxima of our search results to guard the efficacy of the

framework.

Mapping Between Workload Model and Effective Workload

Note first that different workload models may sometimes result in the same stressmark and

therefore the same effective workload. For example, the instruction footprint may be 50 instructions

while the number of memory operations is only 1%. In this case the framework will

generate zero memory instructions, which is of course the same stressmark as the one created

for the same workload model, but with 0% memory operations instead of 1%. Moreover,

since there are no memory instructions at all, additional memory parameters in the workload

model become irrelevant (i.e. data footprint, stride distribution, reads/writes, shared/non-

shared). Workload models differing only in these parameters will once again result in the

same stressmark.

It is now also clear that the effective workload is not always consistent with the workload

model. This is not only caused by duplicate mappings as illustrated by the example above, but

also by certain complexities within the stressmark generator algorithm. These are described

in the next chapter.

Multi-threading Aspects

The multi-threaded stressmarks are created from a single workload. This means that every

core will be running the same synthetic benchmark. The interaction between threads will

happen at the memory level. There are two situations of contention between threads. First,

threads may be competing over cache line ownership; in this case we stress the cache coherency

mechanisms. Second, contention may happen if threads compete for cache memory; this will

be the case if the size of the global memory combined with all the thread-local memory is

bigger than the total cache size.

We did not implement synchronization primitives such as mutexes in the stressmarks. These

primitives will typically cause the processor to stall for a while. Stalling is undesired behavior

for stressmarks.

Chapter 3

Synthetic Benchmarks in C

3.1 Introduction

In the previous chapter we described the workload model, a collection of program character-

istics that describe a stressmark. In this chapter we will examine how the workload model

can be transformed into an executable stressmark.

We want the framework to be platform-portable, meaning that it should be capable of gener-

ating stressmarks for almost any platform given the platform-dependent parameters without

knowing the instruction-set or register-set of the platform. To achieve this feature, we use

a low-level programming language instead of assembler to express the stressmark. Once the

stressmark is compiled for the target platform, we obtain an executable stressmark.

This is why our framework doesn’t have to know the platform it is testing; it only needs a

compiler that supports it.

3.2 Language and Compiler Requirements

We will start with defining the criteria for the low-level programming language. First, compi-

lation should be static; interpreted or JIT compiled languages will not do. Second, it must be

possible to express the various workload properties in the language constructs. And finally, a

high quality compiler should be available for almost any platform.

As low-level programming language we therefore chose the C programming language. It is so

well supported that it is the de facto standard among the low-level programming languages.

Virtually every platform has a C compiler and most have a highly optimized one.

3.2.1 Alternatives

An alternative language to C could be Fortran; it is less widely supported but it fits all the other

criteria perfectly. We opted for C over Fortran mainly because we have more experience

with it.

Note that in the end, the language choice may not matter all that much, since compilers like

the GNU Compiler Collection support many languages and use the same backend for every

language, making it quite unlikely that using another language will yield significantly better

or worse results. In fact the low-level language used is nothing more than an interface to

control the backend of the compiler for platform-specific code generation.

An alternative approach could be to skip the compiler frontend altogether and directly im-

plement the stressmark in the intermediate representation (IR) of the compiler. We could for

example use GCC’s GIMPLE/TUPLES or LLVM’s IR. Both compiler frameworks support a

lot of platforms, but not all of them. Some specialized embedded processors (such as Trident

media processors or Microchip PIC processors) only have commercial compilers.

Using the compiler’s IR to express the stressmark would improve our control over the form

and properties of the stressmark. In C, by contrast, we have to go to considerable

lengths to make sure that the critical stressmark properties are preserved after

compilation. In return for this extra effort, implementing the stressmarks in C yields a significant

portability advantage.

3.2.2 Expressing the Stressmark in C

Expressing the stressmark in C is quite simple. The language constructs allow us to easily

express all the behavior we want. Figure 3.1 illustrates the general form

of a stressmark. Beware that this is a very naive implementation of a stressmark, only for the

purpose of example.

Before we start running the stressmark, we need to perform some initialization. The initial-

ization contains some variable declarations and the memory allocation.

The next part is the stressmark loop, consisting of a start block and the stressmark body. In

the start-block the next iteration of the stressmark body is prepared, and it is checked whether

the stressmark has finished. The stressmark body contains the actual behavior conforming to

the workload model. It is important to note that there are no loops inside the stressmark

body.

Finally, in the finalization routine we free the allocated memory.

Figure 3.1: Global stressmark structure.

3.2.3 Compiling the C Stressmark

The role of the compiler in our framework is to fill in the platform-specific details. The com-

piler should perform optimal instruction selection and register allocation for the underlying

platform. At the same time, the compiler may not change the execution properties of the

stressmark as they are expressed in the low-level programming language.

The example may look like a perfectly working stressmark but after compilation, the result is

very disappointing. If we compile this example, we notice that the variables v1 and v2 have

disappeared. The branch operation and the arithmetic operations have been eliminated as

well. This is because they are functionally redundant; they do not contribute to the result

of the function, nor do they generate any effect. We also notice that the stride calculation

checks for division-by-zero, which is not needed since memSize will always be greater than

zero.

Listing 3.1: Compilation result with -O1

stride3 = (stride3 + 12) % memSize;
  400548: addiu v0,s0,12
  40054c: div zero,v0,s2
  400550: bnez s2,40055c <stressmark+0x4c>
  400554: nop
  400558: break 0x7
  40055c: mfhi s0
if (i-- == 0) break;
  400560: addiu s1,s1,-1
  400564: beq s1,v1,400578 <stressmark+0x68>
  400568: sll v0,s0,0x2
v2 = v3 + v2;               // arithmetic instruction
if (i & 2) v1 = v1 * v3;    // branch instruction
memory[stride3] = v3;       // memory instruction
  40056c: addu v0,v0,a0
  400570: j 400548 <stressmark+0x38>
  400574: sw s3,0(v0)

This brings us to the disadvantages of using a low-level programming language as imple-

mentation target for stressmarks. While the compiler is very good at converting a program

into machine code, it will also optimize the program. For normal applications, optimizing is

of course beneficial as it eliminates unnecessary operations without changing the functional

behavior. However, when a stressmark is compiled, some optimizations could change char-

acteristics reflecting the workload model; these optimizations are undesired. Unfortunately,

we cannot wholly disable optimization as we still rely on intelligent instruction selection and

register allocation for efficiency and correctness.

We want to stress that the optimization tradeoff is very tricky to get right. If the generated

code is not optimized, the result is inefficient as the available registers and instructions are

not optimally utilized. If the compiler performs too much optimization, critical parts of the

stressmark may be optimized away, changing the stressmark behavior and jeopardizing its

conformity to the workload model.

In the remainder of this chapter, we will mainly focus on how to tune the compiler and

the structure of the stressmark to generate correct executable stressmarks. Before analyz-

ing the optimization countermeasures, we define which optimization behavior is required, or

acceptable.

3.2.4 Compiler Requirements

The compiler is required to perform two tasks: instruction selection and register allocation.

The workload is only correctly expressed in the executable stressmark if the arithmetic and

memory operations use registers. Stack operations and register spilling should be avoided as

much as possible.

The compiler is allowed to do some instruction rescheduling. The workload does not define

the instruction ordering; only the minimum dependency distance is defined. If the compiler

performs some instruction rescheduling, the minimum dependency distance could possibly

change. Such optimizations are not considered harmful. The compiler may have good enough

knowledge about the latencies of instructions to be able to reschedule instructions without

causing a slowdown. Instruction rescheduling across blocks is however not acceptable.

It is also the responsibility of the compiler to optimize the address calculation for memory

instructions.

3.3 Exploring the Optimization Behavior of the Compiler

We addressed the compiler optimization problem using two approaches. First of all, we

dumbed the compiler down to the minimum level of optimization using a predefined opti-

mization level, tweaked with special compiler flags. We then designed the structure of a

stressmark and made it immune to the remaining optimizations of the dumbed-down com-

piler.

From this point on, our results depend on the compiler and the platform used. We use

GCC 4.4 to verify the x86-64 target and GCC 3.4 to verify the SESC/MIPS target.

3.3.1 Configuring the Compiler

The GCC versions we use offer five optimization levels: O0, O1, O2, O3, and Os. The lowest optimization

level O0 is not useful since it does not perform any register allocation; from O1 onwards it

does. We fine-tuned the O1 profile a bit more using flags to disable some loop optimizations.

Table 3.1: Used compiler flags

GCC 3.4 -fno-loop-optimize -mno-check-zero-division -fnew-ra -fno-if-conversion -fno-if-conversion2

GCC 4.4 -fno-tree-loop-optimize -fno-if-conversion -fno-if-conversion2

Through extensive trial and error we sought to find a combination of flags that sufficed to

reliably compile stressmarks. The counter-optimization methods used in the next part rely

on these options.

This is a fragile part of our framework; if a new compiler is used, the user will have to configure

that compiler to fit our stressmark method. If the compiler cannot be configured correctly,

there are two solutions. One way is to change how stressmarks are generated by the framework

based on the behavior of the new compiler. The other solution is to change the framework

component that generates C into a component that generates assembler, thus giving up on

platform portability.

If you can configure the compiler correctly, you can generate stressmarks almost without any

customization. On top of that, GCC already supports many compilation targets and these

require no changes at all.

3.3.2 Analyzing Compiler Optimizations

In this section we will investigate the optimizations of the dumbed-down compiler and their

effects on the quality of the stressmark.

Redundant Code Elimination

Redundant operations are operations that do not contribute to the result of the function or

do not produce an effect, such as writing to memory.

Redundant functions

Table 3.2: Redundant function

C                                 Assembler (MIPS)
void function(){                  Completely eliminated
  int i,v1=2,v2=3,v3=7,v4=5;
  for(i = 0; i<100; i++){
    v1 = v3 * v4;
    v2 = v2 - v4;
    v1 = v2 / v4;
  }
}

The function in this example only contains dead code. Even the dumbed-down compiler will

completely eliminate this function.

Table 3.3: Alternatives

Alternative 1                     Alternative 2
int function(){                   void function(){
  int i,v1=2,v2=3,v3=7,v4=5;        volatile int effect;
  for(i = 0; i<100; i++){           int i,v1=2,v2=3,v3=7,v4=5;
    v1 = v3 * v4;                   for(i = 0; i<100; i++){
    v2 = v2 - v4;                     v1 = v3 * v4;
    v1 = v2 / v4;                     v2 = v2 - v4;
  }                                   v1 = v2 / v4;
  return v1 + v2 + v3;              }
}                                   effect = v1 + v2 + v3;
                                  }

There are two ways to prevent the function from being completely eliminated. In the first

alternative we make the return value dependent on the variables that are used. In the second

alternative we use a volatile variable to generate an effect. The volatile variable is a variable

that is always stored in memory, never in a register. Writing the sum of the used variables

to memory makes it visible for other threads to see, thus preventing the variables from being

optimized away.

Redundant operations Now that the function is not completely optimized away, we turn

our attention to the loop inside the function that represents a stressmark body.

Table 3.4: Redundant operations

C                           Assembler (MIPS)
for(i = 0; i<100; i++){     for(i = 0; i<100; i++){
                              40051c: move v1,zero
  v1 = v3 * v4;               v1 = v3 * v4;
  v2 = v2 - v4;               400520: subu a2,a2,a0    -> v2=v2-v4;
                              400524: addiu v1,v1,1
                              400528: slti v0,v1,100
                              40052c: bnez v0,400520 <main+0x10>
  v1 = v2 / v4;               400530: div zero,a2,a0   -> v1=v2/v4;
}                           }

After compilation, we can see that the compiler has eliminated one of the operations in the

loop body. The first and the third operation write to the same variable while this variable is

never read between these two writes. In other words, the first operation is redundant. This op-

timization behavior has significant ramifications for implementing the minimum dependency

distance.

Listing 3.2: Dependency distance

. . .

v1 = v1 * v2 ; // v1 Write

v2 = v2 / v3 ;

v3 = v3 + v4 ;

v4 = v4 * v5 ;

v5 = v4 / v1 ; // v1 Read

. . .

The minimum dependency distance is defined as the minimum RAW distance. In the above

example the RAW distance is four. To achieve this distance without redundant operations,

we had to use five variables. If we were to increase the minimum dependency distance, the

required number of variables would increase proportionally.

Since we want all the variables to be stored in registers for optimal execution, a large minimal

RAW distance will require a lot of hardware registers. If there are not enough hardware

registers available, this will cause register spills. Not only is this undesired behavior, it is

also impossible for the framework to detect this happening. This is a limitation caused by

the use of a low-level programming language. If we implemented the benchmark directly

in assembler, it would be a lot easier to achieve very large RAW distances with only a few

hardware registers. In fact, we would probably drop the minimum dependency distance from

the workload model.

In table 3.5 we look at redundancy elimination in combination with conditional instructions,

commonly known as "partial redundancy elimination". After compilation, the superfluous

conditional expression is completely eliminated by the dumbed-down compiler. This

means that the redundancy removal even works across blocks within the loop.

Conclusion This is by far the most annoying optimization and to our knowledge there is

no way to prevent the compiler from applying it by tweaking the compiler flags. Whenever

we enable register allocation, the compiler will try to eliminate the most obvious unnecessary

instructions.

Table 3.5: Redundant blocks

C                           Assembler (MIPS)
for(i = 0; i<100; i++){     for(i = 0; i<100; i++){
                              40051c: move v1,zero
  if(i == 10) v1=v3*v4;       if(i == 10) v1=v3*v4;
  v1 = v3 * v4;               v1 = v3 * v4;
  v2 = v2 - v4;               400520: subu a2,a2,a0    -> v2=v2-v4;
                              400524: addiu v1,v1,1
                              400528: slti v0,v1,100
                              40052c: bnez v0,400520 <main+0x10>
  v1 = v2 / v4;               400530: div zero,a2,a0   -> v1=v2/v4;
}                           }

Loop Invariants

The body of the stressmark is placed inside a loop. A typical compiler optimization is to

hoist loop invariants out of the loop. To avoid this optimization through the structure

of the stressmark, we would have to make sure that all variables within the loop are de-

pendent on a previous iteration of the loop. This would add a lot of complexity to the

stressmark generator. Fortunately we were able to avoid this by setting the compiler flag

"-fno-tree-loop-optimize" ("-fno-loop-optimize" for GCC 3.4, see table 3.1).

Table 3.6: Loop invariants

C                           Assembler (MIPS)
for(i = 0; i<100; i++){     for(i = 0; i<100; i++){
                              400518: move a0,zero
  v1 = v3 * v4;               40051c: mult a2,a1       -> v1 = v3 * v4;
                              400520: addiu a0,a0,1
                              400524: slti v0,a0,100
                              400528: bnez v0,40051c <main+0xc>
  v2 = v3 + v4;               40052c: addu v1,a2,a1    -> v2=v3+v4;
}                           }

In the example (table 3.6) both instructions are loop-invariant. However, if we look at the

compilation result, we can see that they are still inside the loop. The multiplication

(v1 = v3*v4) is placed at the beginning of the loop. The addition (v2 = v3+v4) is placed in the

branch delay slot of the branch instruction.

We conclude that we needn’t worry about loop invariants in the body of the stressmark.

Constant Folding and Propagation

These optimizations are present in the dumbed-down compiler, but because of the

"-fno-tree-loop-optimize" flag, they do not work on literals declared outside the loop.

These optimizations come in handy because they optimize some of the address calculation for

memory operations inside the stressmark loop. However, there are some cases where these

optimizations may cause trouble when combined with algebraic simplifications. Listing 3.3 is

a reduced problem case we encountered while testing the framework.

Listing 3.3: Constant folding and propagation

[...]
v1 = v2 / v2; // v1 = 1 -> eliminated (algebraic simplification)
[...]
v3 = v1 * v1; // v3 = v1 = 1 -> eliminated (constant folding and prop.)
v5 = v1 + v1; // v5 = 2 -> eliminated (constant folding and prop.)
[...]
v6 = v3 / v5; // v6 = v3 >> 1 -> peephole optimization
[...]

To avoid these optimizations, we came up with a few extra rules for generating the stressmark.

• All variables are initialized with different values.

• Instructions with two operands use two different registers as operands.

Code Layout and Branch Optimization

The compiler eliminates branches whenever it can, and by doing so also removes unreachable

code. These optimizations make it harder to implement static branches in the stressmark.

Table 3.7: Branch optimization

Unoptimized                                   Optimized
if(condition) goto L3; else goto L2;          if(condition) goto L3;
L2: [...]                                     L2: [...]
L3: [...]                                     L3: [...]

Unoptimized                                   Optimized
[...]                                         [...]
goto L3                                       // eliminated
L2: [...] // nothing jumps to this block      // eliminated
L3: [...]                                     L3: [...]

In listing 3.4 we show a solution to implement static branches in the stressmark. The for-

loop represents the stressmark body. In the finalization routine, we jump to the block that

otherwise would be eliminated.

Listing 3.4: Static branch implementation

for (i = 0; i<MaxIter; i++) { // stressmark body
    [...]
    goto L3;
    L2: [...]
    L3: [...]
}
goto L2; // finalization routine

Instruction Rescheduling

Most instruction rescheduling optimizations only become available with the -O2 optimization

level. This optimization is allowed as long as it doesn’t work across blocks. In practice we

rarely see an instruction rescheduling optimization in the compiled code.

Exceptions

While compiling divisions and modulo operations for the MIPS target, the compiler will generate

code that checks for division-by-zero. By using the compiler flag "-mno-check-zero-division"

we can disable this safety check.

3.4 Interesting C Constructs

Before we use all the knowledge we gained about the optimization behavior of the compiler

to implement a good stressmark, we look at some interesting language constructs in C that

may help constructing stressmarks.

3.4.1 Volatile Variables

Volatile variables in C are variables that may change in a way that is not predictable by

the compiler. Volatile variables are typically used to implement signal handlers, or to access

memory mapped devices.

The volatile keyword prevents the compiler from storing the variable content in a register;

this means that writing to a volatile variable always results in writing to a static memory

address, and reading from a volatile variable always results in reading from a static memory

address.

Since the content of the memory is modified, these operations are effectful and cannot be

optimized away.

3.4.2 Const Variables

The value of const variables cannot be changed after initialization. Typically the const

keyword does not improve performance at a sufficiently high optimization level, since the

compiler can figure out if a variable will be modified or not. The dumbed-down compiler, however,

does need this information to improve the address calculation for memory instructions.

3.4.3 Control Flow

While researching how we could implement the flow control in the stressmark, we found many

alternatives. Some examples:

Table 3.8: Alternative control flow implementations

Alternative 1               Alternative 2
while( i > 0 ) {            start:
  i--;                      if(i <= 0) goto end;
  if( condition1 ) {        i--;
    a = b + c;              if(!condition1) goto L1;
  }                         a = b + c;
  c = a * b;                L1:c = a * b;
  if( condition2 ) {        if(!condition2) goto L2;
    d = e + f;              d = e + f;
  }                         L2:f = e * d;
  f = e * d;                goto start;
}                           end:

In the example both alternatives are functionally equivalent and they compile to exactly the

same machine code. We opted to use gotos because they are more flexible; only gotos allow

us to implement unoptimizable static branches (listing 3.4).

3.5 Forming the Stressmark

We will explain the structure of the stressmarks in three stages. We start with only arithmetic

instructions, then add memory operations, and finally control flow to the stressmark.

3.5.1 Arithmetic Operations

The first stressmark is a simple loop containing only arithmetic operations. Stressmarks

such as this one can be generated by the framework by providing a workload model with an

instruction mix consisting 100% of arithmetic instructions.

The stressmark starts by initializing the used variables (vN), the loopcounter (i) and a division

variable (vDiv). The division variable is created to avoid division-by-zero problems. The next

part of the stressmark is the stressmark loop with a very small start block. The stressmark

body contains the arithmetic operations and it finishes by jumping back to the start block.

When the stressmark has finished, it returns the sum of all the variables to avoid optimization

(see table 3.3).

We simplified this example by using only integer operations. More typical stressmarks will use

a combination of integer and floating point operations. This stressmark contains one dynamic

branch instruction for the loop even though this is not defined in the workload model.

Listing 3.5: Arithmetic instructions

int stressmark() {
    int i = 999999, v1 = 2, v2 = 3, v3 = 5, v4 = 7, v5 = 13;
    const int vDiv = 3;
start:
    if (i-- <= 0) goto end; // start block
    v1 = v2 + v3;   // int add
    v2 = v3 / vDiv; // int div
    v3 = v4 * v5;   // int mul
    v4 = v5 + v1;   // int add
    v5 = v1 / vDiv; // int div
    [...]
    goto start;
end:
    return v1+v2+v3+v4+v5;
}

The number of registers a stressmark uses is critical to avoid register spilling. Register usage

breakdown:

• Necessarily stored in registers
  – Loop counter (i): 1
  – Variables (vN): minimum dependency distance + 1
• Optionally stored in registers
  – Division variable (vDiv): one register for every data type

How the compiler compiles the division variable depends on the number of available registers.

Sometimes it is loaded as a literal before the division; if not, the variable will continuously

be kept in a register.

3.5.2 Memory Instructions

To support memory instructions we need to declare thread-local (localMemoryArray) and

shared (globalMemoryArray) memory regions. These regions are split into smaller arrays,

one for each striding memory instruction.

We assign each striding memory instruction a dedicated array to avoid memory instructions

influencing each other’s behavior; more specifically, we want to avoid memory instructions

causing data of another memory instruction to be cached. The performance of a memory

instruction should after all be independent of other instructions.

To implement the striding behavior of the memory instructions, we use the variables lStrideN
for local and sStrideN for shared memory instructions, with N equaling the stride distance. In
the start block of the stressmark, these variables are incremented by the stride value (= stride
distance * cache line length). A striding memory instruction accesses its array at the offset
given by lStrideN or sStrideN.
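The offset update can be looked at in isolation. The snippet below is a minimal sketch, not the code the framework generates: the helper names next_offset and offset_after, and the constants LINE_INTS (an assumed 64-byte cache line holding 16 four-byte ints) and ARRAY_INTS, are assumptions chosen for illustration only.

```c
/* Assumed for illustration: 64-byte cache line, 4-byte int. */
enum { LINE_INTS = 16, ARRAY_INTS = 256 };

/* Advance a stride offset by `stride_distance` cache lines,
 * wrapping around so the access stays inside its dedicated array. */
int next_offset(int offset, int stride_distance) {
    return (offset + stride_distance * LINE_INTS) % ARRAY_INTS;
}

/* Apply the start-block update `iterations` times, starting at
 * offset 0, and return the resulting offset. */
int offset_after(int iterations, int stride_distance) {
    int offset = 0;
    for (int k = 0; k < iterations; k++)
        offset = next_offset(offset, stride_distance);
    return offset;
}
```

With these assumed values, a stride-2 instruction touches every second cache line of its array and wraps back to offset 0 after 8 iterations.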

Non-striding memory instructions are a lot easier to implement; we can simply use volatile

variables (sCel1, lCel1, lCel2) to this effect.
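As a small illustration of why volatile is sufficient here, the sketch below performs a number of non-striding write/read pairs on a single cell. The function name touch_cell is hypothetical; the point is only that the compiler has to emit an actual store and load for every access to a volatile object, so the memory instructions cannot be optimized away.

```c
/* Hypothetical helper: performs `n` non-striding write/read pairs
 * on one thread-local cell and returns the sum of the values read. */
int touch_cell(int n) {
    volatile int lCel1 = 0;  /* volatile: each access below is emitted */
    int v = 0;
    for (int k = 0; k < n; k++) {
        lCel1 = k;           /* memwrite, stride 0 */
        v += lCel1;          /* memread, stride 0 */
    }
    return v;
}
```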

Implementing memory instructions causes the initialization and finalization block to grow a

bit, but this of course does not affect the stressmark. The start block has grown as well and

it now contains some expensive modulo operations that will be executed at the beginning of

every iteration. If the stressmark body is sufficiently large, those operations shouldn’t have

an effect on the performance.

The implementation of non-striding memory instructions is very cheap. They read from or

write to an address equal to a static offset from the stack pointer, and can be executed in

a single instruction. Striding memory instructions require some address calculation. They

could be implemented more efficiently if every instruction in the loop had a dedicated hardware

register to store the address. However, since our implementation is already constrained by

the number of registers, we opted not to implement it in this way.

Listing 3.6: Arithmetic + memory instructions

volatile int sCel1;

int stressmark(int * const globalMemoryArray) {
    int i = 999999, v1 = 2, v2 = 3, v3 = 5, v4 = 7, v5 = 13;
    const int vDiv = 3;
    int * const localMemoryArray = malloc(...);
    int * const lArr1 = &localMemoryArray[0];
    int * const lArr2 = &localMemoryArray[100];
    int * const lArr3 = &localMemoryArray[200];
    int * const sArr1 = &globalMemoryArray[0];
    volatile int lCel1, lCel2;
    int lStride2 = 0, lStride4 = 0, sStride2 = 0;
start:
    if (i-- <= 0) goto end;               // begin start block
    lStride2 = (lStride2 + 2*4) % 100;
    lStride4 = (lStride4 + 4*4) % 100;
    sStride2 = (sStride2 + 2*4) % 150;    // end start block
    v1 = v2 + v3;                         // int add
    v2 = lArr1[lStride2];                 // memread stride=2
    v3 = v3 / vDiv;                       // int div
    lArr2[lStride2] = v4;                 // memwrite stride=2
    v4 = lCel1;                           // memread stride=0
    v5 = lArr3[lStride4];                 // memread stride=4
    lCel2 = v1;                           // memwrite stride=0
    v1 = v2 / vDiv;                       // int div
    [...]
    goto start;
end:
    free(localMemoryArray);
    return v1+v2+v3+v4+v5;
}

The number of registers used by the implementation has significantly increased.

Register usage breakdown:

• Necessarily stored in registers
  – Loop counter (i): 1
  – Variables (vN): minimum dependency distance + 1
  – Stride offsets (lStrideN and sStrideN): 1 for every stride distance used by shared or
    thread-local memory instructions; e.g. 4 stride distances used by shared memory
    instructions and 2 used by thread-local memory instructions require 6 registers in total.
  – Addresses of localMemoryArray and globalMemoryArray: 2 registers
• Optionally stored in registers
  – Division variable (vDiv): one register for every data type

The variables localMemoryArray and globalMemoryArray should be stored in registers to
prevent them from being reloaded before every striding memory instruction.

The number of required registers is increased by a maximum of ten. Typically there will

only be a few striding memory instructions in a stressmark, so the actual number of extra

registers is lower. It is important to note that the non-striding memory instructions require

no registers because of the use of volatile variables.

3.5.3 Branch Instructions

The final step is to include branch instructions, which will cause some instructions to be
executed conditionally. Note first that conditional execution is not allowed for every
instruction type. Striding memory instructions must be executed in each iteration because
their offset is calculated in every iteration; if they are skipped, there will be a gap in their path.

We want to implement the branch behavior as cheaply as possible in terms of register usage.

We reuse the loop counter to calculate if a branch will be taken or not. We use the lowest bits

of the loop counter. The first bit will constantly alternate, forming the pattern ...101010...,

which corresponds to an inverse branch transition rate of 1. The pattern of the second bit is

...110011001100..., corresponding to an inverse branch transition rate of 2, etc. This makes

it very simple to implement branch instructions that conform to the workload model, as long as

the inverse branch transition rates are powers of two. However, these branches are highly

regular and therefore very predictable. This is not so bad because we typically want very

predictable branches in a stressmark to reduce the stalling probability. A more advanced

implementation of branch transition rates can be found in the remarks (listing 3.10); note

that it uses a lot more registers though.
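The bit-pattern argument can be checked with a short sketch. The helper names branch_taken and count_transitions are hypothetical and not part of the framework; mask stands for the inverse branch transition rate and is assumed to be a power of two.

```c
/* Nonzero when the branch guarded by `mask` is taken in iteration `i`.
 * `mask` is the inverse branch transition rate (a power of two). */
int branch_taken(int i, int mask) {
    return (i & mask) != 0;
}

/* Count how often the branch outcome changes while the loop counter
 * runs from `start` down to 0. */
int count_transitions(int start, int mask) {
    int transitions = 0;
    int prev = branch_taken(start, mask);
    for (int i = start - 1; i >= 0; i--) {
        int cur = branch_taken(i, mask);
        if (cur != prev)
            transitions++;
        prev = cur;
    }
    return transitions;
}
```

Over 16 iterations, a mask of 1 changes outcome on every iteration, a mask of 2 on every second iteration, and a mask of 4 on every fourth, which is the regular pattern described above.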

Using branches also has an effect on the minimum dependency distance. To preserve the

minimum dependency distance across multiple branch instructions, we have to take all possible

paths into account; this results in more registers being used.

Because of this, we reduce the number of instructions in a conditional block to a single
instruction. It would be reasonable to expect the compiler to optimize these very small
conditional blocks by replacing them with conditional moves. However, we can disable this
optimization using the flags -fno-if-conversion and -fno-if-conversion2.

The example is simplified for better readability. The static branch implementation (figure

3.4) was omitted.

Listing 3.7: Arithmetic + memory + branch instructions

// ibtr means "inverse branch transition rate"
volatile int globalMemoryCel;

int stressmark(int * const globalMemoryArray) {
    int i = 999999, v1 = 2, v2 = 3, v3 = 5, v4 = 7, v5 = 13;
    const int vDiv = 3;
    int * const localMemoryArray = (int *) malloc(...);
    int * const lArr1 = &localMemoryArray[0];
    int * const lArr2 = &localMemoryArray[100];
    int * const lArr3 = &localMemoryArray[200];
    volatile int lCel1, lCel2;
    int lStride2 = 0, lStride4 = 0;
start:
    if (i-- <= 0) goto end;
    lStride2 = (lStride2 + 2*4) % 100;
    lStride4 = (lStride4 + 4*4) % 100;
    v1 = v2 + v3;                   // int add
    v2 = lArr1[lStride2];           // memread stride=2
    if (i & 2)                      // branch ibtr=2
        v3 = v3 / vDiv;             // int div
    lArr2[lStride2] = v4;           // memwrite stride=2
    v5 = lArr3[lStride4];           // memread stride=4
    if (i & 4)                      // branch ibtr=4
        v4 = lCel1;                 // memread stride=0
    lCel2 = v1;                     // memwrite stride=0
    v1 = v2 / vDiv;                 // int div
    if (i & 4)                      // branch ibtr=4
        v2 = v3 * v4;               // int mul
    [...]
    goto start;
end:
    free(localMemoryArray);
    return v1+v2+v3+v4+v5;
}

Register usage breakdown:

• Necessarily stored in registers
  – Loop counter (i): 1
  – Variables (vN): minimum dependency distance + (minimum dependency distance * fraction
    of branch instructions) + 1
  – Stride offsets (lStrideN and sStrideN): 1 for every stride distance used by shared or
    thread-local memory instructions; e.g. 4 stride distances used by shared memory
    instructions and 2 used by thread-local memory instructions require 6 registers in total.
  – Addresses of localMemoryArray and globalMemoryArray: 2 registers
• Optionally stored in registers
  – Division variable (vDiv): one register for every data type
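The breakdown above can be turned into a rough estimate. The function below is only a sketch of that arithmetic: its name and parameters are hypothetical, the branch fraction is passed as a percentage, and rounding the branch term up is an assumption the text does not state. The optional vDiv registers are left out.

```c
/* Rough count of the registers that must be available, following the
 * breakdown above. All names here are illustrative. */
int estimate_registers(int min_dep_dist, int branch_percent,
                       int local_strides, int shared_strides) {
    int loop_counter = 1;
    /* minimum dependency distance * fraction of branch instructions,
     * rounded up (the rounding direction is an assumption) */
    int branch_extra = (min_dep_dist * branch_percent + 99) / 100;
    int variables = min_dep_dist + branch_extra + 1;
    int stride_offsets = local_strides + shared_strides;
    int array_addresses = 2;  /* localMemoryArray and globalMemoryArray */
    return loop_counter + variables + stride_offsets + array_addresses;
}
```

For example, a minimum dependency distance of 4, 25% branch instructions, two thread-local stride distances and one shared stride distance give 1 + 6 + 3 + 2 = 12 registers under these assumptions.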

All that remains at this point is to start the stressmark threads.

Listing 3.8: Starting stressmarks

void * runstressmark(void * ptr) {
    stressmark((int * const) ptr);
    return NULL;
}

int main(int argc, char** argv) {
    int t0, t1, t2, t3;
    globalMemoryArray = (int *) malloc(...);
    sesc_init();
    // one spawn per hardware thread
    sesc_spawn((void *) *runstressmark, (void *) globalMemoryArray, NULL);
    sesc_spawn((void *) *runstressmark, (void *) globalMemoryArray, NULL);
    sesc_spawn((void *) *runstressmark, (void *) globalMemoryArray, NULL);
    sesc_spawn((void *) *runstressmark, (void *) globalMemoryArray, NULL);
    sesc_wait();
    sesc_exit(0);
    return 0;
}

The main function allocates the global memory and starts as many threads as there are
hardware threads. The threading implementation is platform-specific; for the MIPS target
we use a simulator-specific library (SESC threads), and for the x86 target we use PThreads.
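For the x86 target, the same start-up sequence could look roughly as follows with PThreads. This is a sketch under stated assumptions, not the framework's actual code: run_stressmarks, its parameters and the stub stressmark body are hypothetical, and only the standard pthread_create and pthread_join calls are used.

```c
#include <pthread.h>
#include <stdlib.h>

/* Stand-in for the generated stressmark; the real body would be the
 * stressmark loop. Here it only reads one shared element. */
static int stressmark(int * const globalMemoryArray) {
    return globalMemoryArray[0];
}

static void *runstressmark(void *ptr) {
    stressmark((int *) ptr);
    return NULL;
}

/* Start `nthreads` stressmark threads that share one array of
 * `shared_ints` ints (at least 1) and wait for all of them.
 * Returns 0 on success, -1 on failure. */
int run_stressmarks(int nthreads, size_t shared_ints) {
    int *globalMemoryArray = calloc(shared_ints, sizeof *globalMemoryArray);
    pthread_t *threads = malloc((size_t) nthreads * sizeof *threads);
    int started = 0, status = 0;

    if (globalMemoryArray == NULL || threads == NULL) {
        free(globalMemoryArray);
        free(threads);
        return -1;
    }
    for (; started < nthreads; started++) {
        if (pthread_create(&threads[started], NULL,
                           runstressmark, globalMemoryArray) != 0) {
            status = -1;  /* stop spawning, still join what was started */
            break;
        }
    }
    for (int t = 0; t < started; t++)
        pthread_join(threads[t], NULL);

    free(threads);
    free(globalMemoryArray);
    return status;
}
```

A call such as run_stressmarks(4, 1024) mirrors the four spawns of the SESC listing; with GCC this is typically built with the -pthread option.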

While designing the stressmark form, we mainly focus on avoiding compiler optimization and

minimizing the register usage to ensure the ILP can be maximized.

3.6 Remarks